// Copyright 2019 The Gitea Authors. All rights reserved.
// SPDX-License-Identifier: MIT

package issues

import (
	"context"
	"fmt"

	"code.gitea.io/gitea/models/db"
	access_model "code.gitea.io/gitea/models/perm/access"
	repo_model "code.gitea.io/gitea/models/repo"
	"code.gitea.io/gitea/models/unit"
	user_model "code.gitea.io/gitea/models/user"
	"code.gitea.io/gitea/modules/container"
	"code.gitea.io/gitea/modules/log"
	"code.gitea.io/gitea/modules/util"

	"xorm.io/builder"
	"xorm.io/xorm"
)

// PullRequestsOptions holds the options for PRs
type PullRequestsOptions struct {
	db.ListOptions
	State       string
	SortType    string
	Labels      []int64
	MilestoneID int64
	PosterID    int64
}

// listPullRequestStatement builds a session that selects the pull requests of a base
// repository, applying only the filters that are set in opts.
func listPullRequestStatement(ctx context.Context, baseRepoID int64, opts *PullRequestsOptions) *xorm.Session {
	sess := db.GetEngine(ctx).Where("pull_request.base_repo_id=?", baseRepoID)

	sess.Join("INNER", "issue", "pull_request.issue_id = issue.id")
	switch opts.State {
	case "closed", "open":
		sess.And("issue.is_closed=?", opts.State == "closed")
	}

	if len(opts.Labels) > 0 {
		sess.Join("INNER", "issue_label", "issue.id = issue_label.issue_id").
			In("issue_label.label_id", opts.Labels)
	}

	if opts.MilestoneID > 0 {
		sess.And("issue.milestone_id=?", opts.MilestoneID)
	}

	if opts.PosterID > 0 {
		sess.And("issue.poster_id=?", opts.PosterID)
	}

	return sess
}
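
The function above adds each optional filter to the base query only when the corresponding option is set. The following is a minimal, database-free sketch of that idea. `prFilter` and `buildWhere` are hypothetical names used for illustration; they are not part of Gitea or xorm, and label filtering is left out because it needs an extra join.

```go
package main

import (
	"fmt"
	"strings"
)

// prFilter is a hypothetical, trimmed-down stand-in for PullRequestsOptions.
type prFilter struct {
	State       string
	MilestoneID int64
	PosterID    int64
}

// buildWhere returns a WHERE clause and its arguments, adding a condition
// only for the options that are actually set.
func buildWhere(baseRepoID int64, f prFilter) (string, []any) {
	conds := []string{"pull_request.base_repo_id=?"}
	args := []any{baseRepoID}

	// Any state other than "open" or "closed" means "do not filter by state".
	if f.State == "open" || f.State == "closed" {
		conds = append(conds, "issue.is_closed=?")
		args = append(args, f.State == "closed")
	}
	if f.MilestoneID > 0 {
		conds = append(conds, "issue.milestone_id=?")
		args = append(args, f.MilestoneID)
	}
	if f.PosterID > 0 {
		conds = append(conds, "issue.poster_id=?")
		args = append(args, f.PosterID)
	}
	return strings.Join(conds, " AND "), args
}

func main() {
	where, args := buildWhere(7, prFilter{State: "closed", PosterID: 3})
	fmt.Println(where, args)
}
```

Zero values act as "not set" here, which matches the `> 0` checks in the original function.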

// GetUnmergedPullRequestsByHeadInfo returns all pull requests that are open and have not been merged
func GetUnmergedPullRequestsByHeadInfo(ctx context.Context, repoID int64, branch string) ([]*PullRequest, error) {
	prs := make([]*PullRequest, 0, 2)
	sess := db.GetEngine(ctx).
		Join("INNER", "issue", "issue.id = pull_request.issue_id").
		Where("head_repo_id = ? AND head_branch = ? AND has_merged = ? AND issue.is_closed = ? AND flow = ?", repoID, branch, false, false, PullRequestFlowGithub)

|

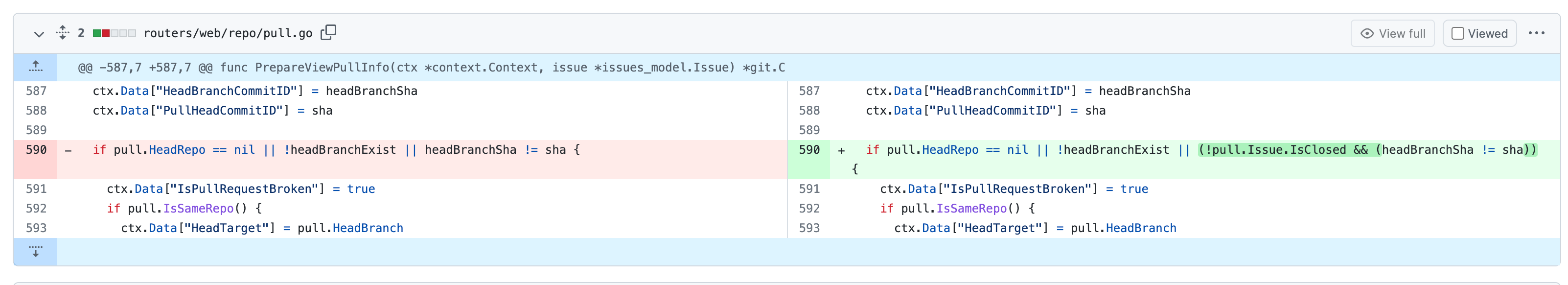

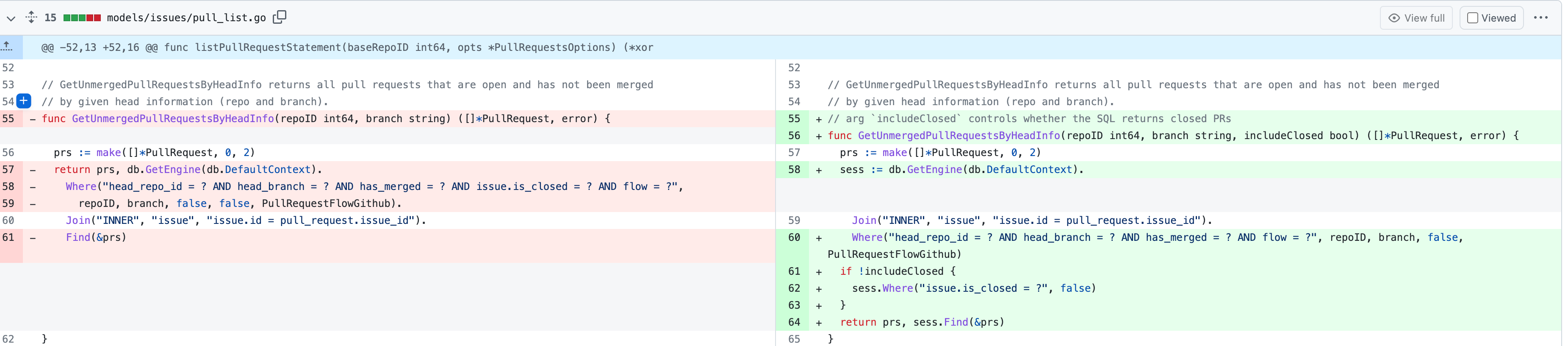

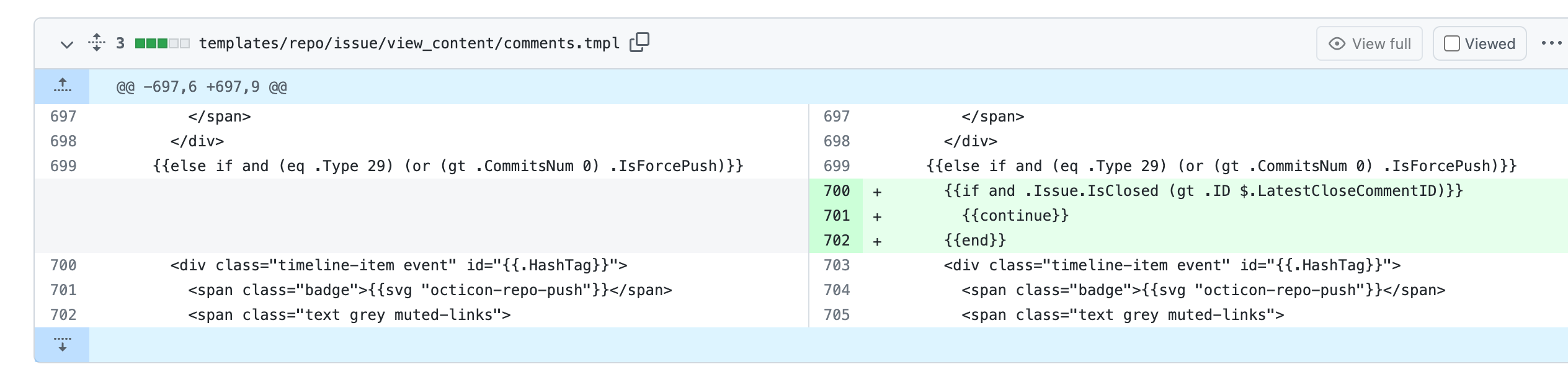

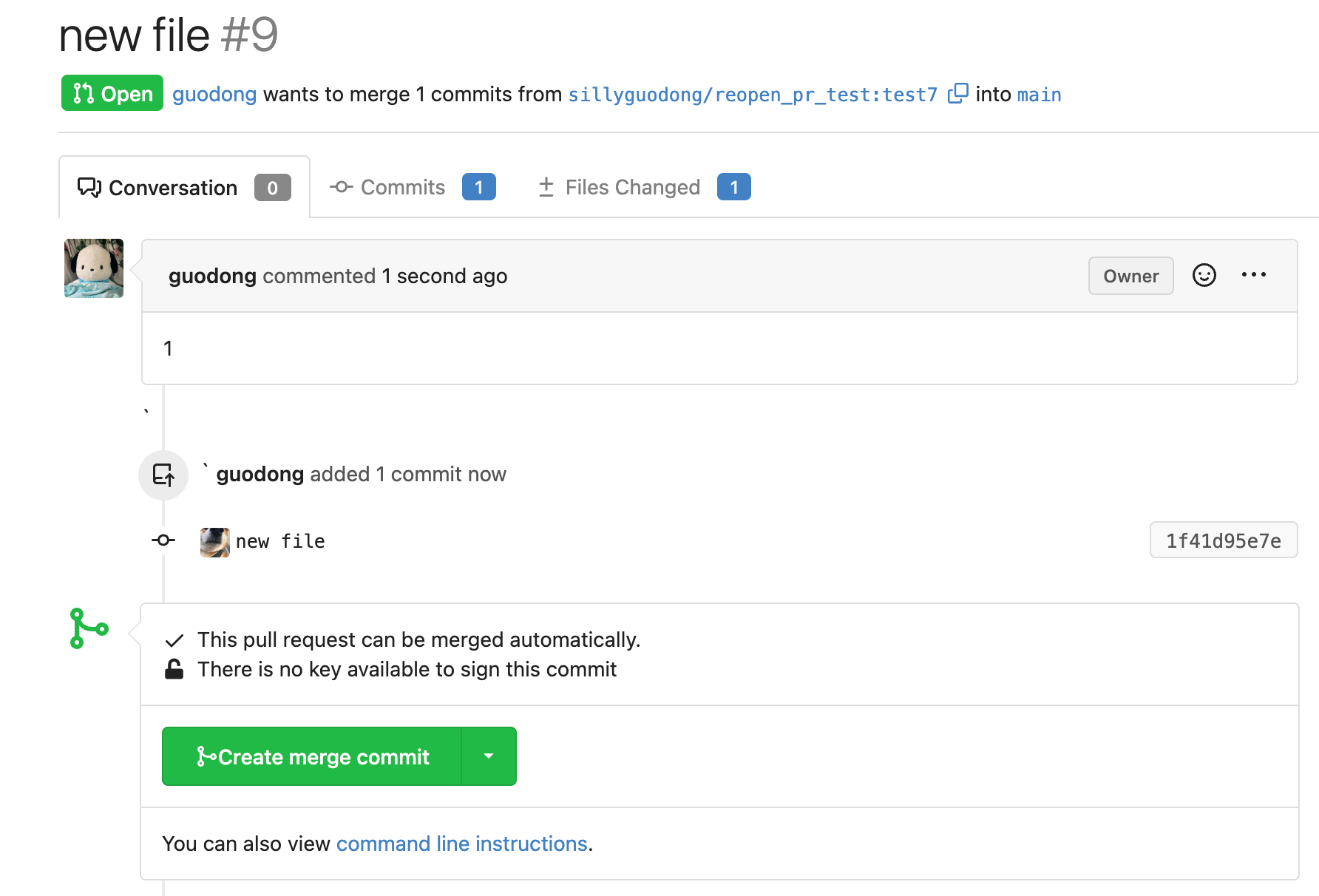

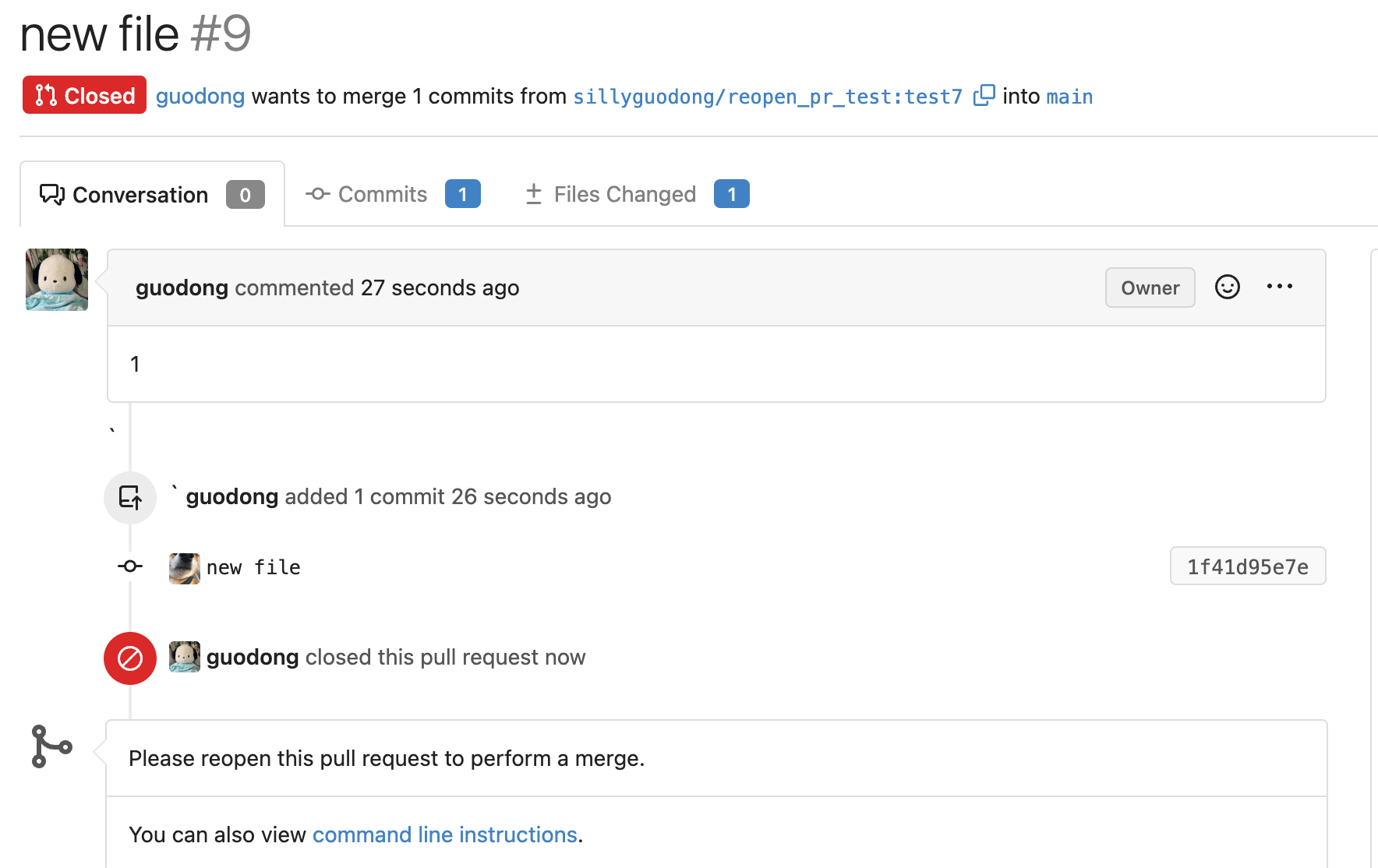

Fix cannot reopen after pushing commits to a closed PR (#23189)

Close: #22784

1. On GH, we can reopen a PR which was closed before after pushing

commits. After reopening PR, we can see the commits that were pushed

after closing PR in the time line. So the case of

[issue](https://github.com/go-gitea/gitea/issues/22784) is a bug which

needs to be fixed.

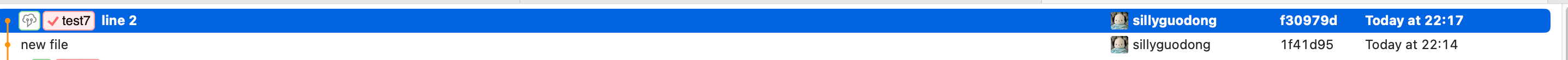

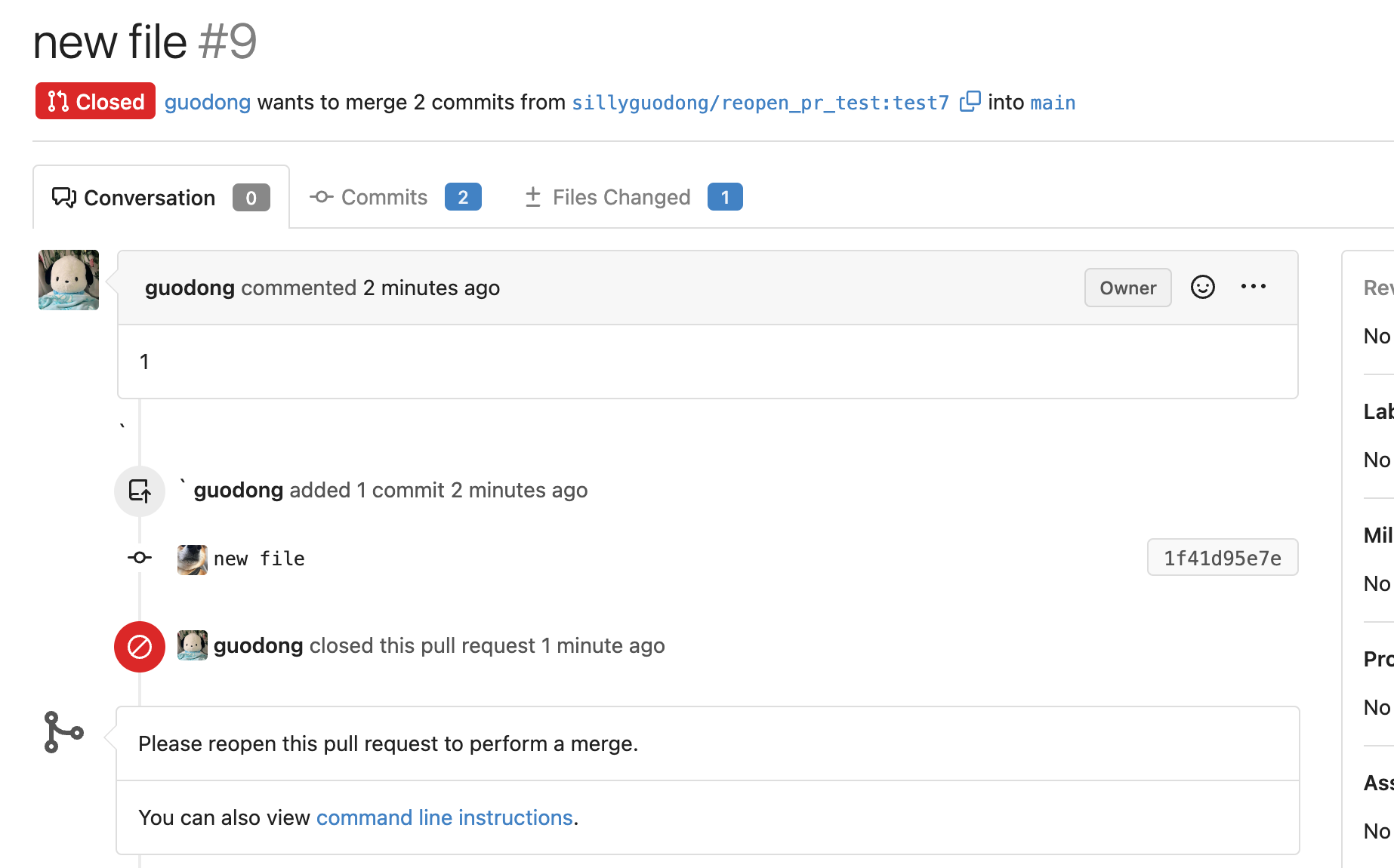

2. After closing a PR and pushing commits, `headBranchSha` is not equal

to `sha`(which is the last commit ID string of reference). If the

judgement exists, the button of reopen will not display. So, skip the

judgement if the status of PR is closed.

3. Even if PR is already close, we should still insert comment record

into DB when we push commits.

So we should still call function `CreatePushPullComment()`.

https://github.com/go-gitea/gitea/blob/067b0c2664d127c552ccdfd264257caca4907a77/services/pull/pull.go#L260-L282

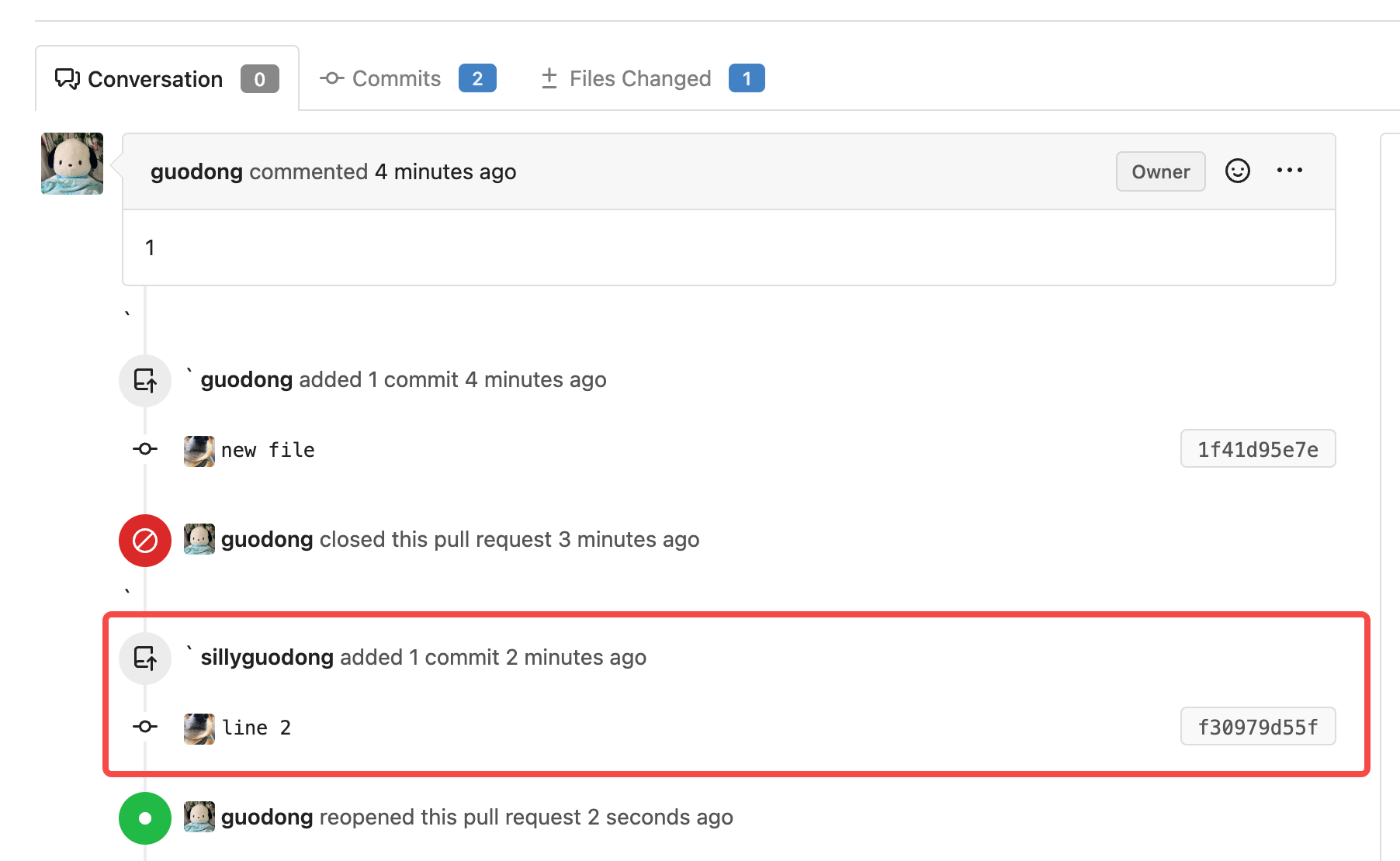

So, I add a switch(`includeClosed`) to the

`GetUnmergedPullRequestsByHeadInfo` func to control whether the status

of PR must be open. In this case, by setting `includeClosed` to `true`,

we can query the closed PR.

4. In the loop of comments, I use the`latestCloseCommentID` variable to

record the last occurrence of the close comment.

In the go template, if the status of PR is closed, the comments whose

type is `CommentTypePullRequestPush(29)` after `latestCloseCommentID`

won't be rendered.

e.g.

1). The initial status of the PR is opened.

2). Then I click the button of `Close`. PR is closed now.

3). I try to push a commit to this PR, even though its current status is

closed.

But in comments list, this commit do not display.This is as expected :)

4). Click the `Reopen` button, the commit which is pushed after closing

PR display now.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

2023-03-03 14:16:58 +01:00

|

|

|

	return prs, sess.Find(&prs)
}

// CanMaintainerWriteToBranch checks whether the user is a maintainer and can write to the branch
func CanMaintainerWriteToBranch(ctx context.Context, p access_model.Permission, branch string, user *user_model.User) bool {
	if p.CanWrite(unit.TypeCode) {
		return true
	}

	// The code below relies on the permission's units to get the repository ID.
	// This is not ideal, but it is kept for now.
	firstUnitRepoID := p.GetFirstUnitRepoID()
	if firstUnitRepoID == 0 {
		return false
	}

	prs, err := GetUnmergedPullRequestsByHeadInfo(ctx, firstUnitRepoID, branch)
	if err != nil {
		return false
	}

	for _, pr := range prs {
		if pr.AllowMaintainerEdit {
			err = pr.LoadBaseRepo(ctx)
			if err != nil {
				continue
			}
			prPerm, err := access_model.GetUserRepoPermission(ctx, pr.BaseRepo, user)
			if err != nil {
				continue
			}
			if prPerm.CanWrite(unit.TypeCode) {
				return true
			}
		}
	}
	return false
}
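
The decision above can be read as follows: direct write access wins. Otherwise, an open pull request from this branch that allows maintainer edits grants access if the user can write to that pull request's base repository. Below is a minimal sketch under those assumptions. The names `headPR`, `canMaintainerWrite`, `canWriteBase`, and `lookup` are hypothetical and not part of Gitea.

```go
package main

import (
	"errors"
	"fmt"
)

// headPR is a hypothetical stand-in for an open pull request whose head is
// the branch in question.
type headPR struct {
	AllowMaintainerEdit bool
	BaseRepoID          int64
}

// canMaintainerWrite reports whether a user may push to a branch.
// canWriteBase is an assumed callback that looks up the user's write
// permission on a base repository and may fail.
func canMaintainerWrite(hasDirectWrite bool, prs []headPR, canWriteBase func(repoID int64) (bool, error)) bool {
	if hasDirectWrite {
		return true
	}
	for _, pr := range prs {
		if !pr.AllowMaintainerEdit {
			continue
		}
		ok, err := canWriteBase(pr.BaseRepoID)
		if err != nil {
			continue // a failed lookup skips this PR instead of aborting
		}
		if ok {
			return true
		}
	}
	return false
}

// lookup is a toy permission table for the demo: the user can write to
// repo 2, and the lookup for repo 3 fails.
func lookup(repoID int64) (bool, error) {
	if repoID == 3 {
		return false, errors.New("permission lookup failed")
	}
	return repoID == 2, nil
}

func main() {
	prs := []headPR{{true, 3}, {false, 2}, {true, 2}}
	fmt.Println(canMaintainerWrite(false, prs, lookup))
}
```

As in the original, an error on one pull request does not deny access outright; the loop simply moves on to the next candidate.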

// HasUnmergedPullRequestsByHeadInfo checks whether there are open, unmerged pull requests
// for the given head information (repo and branch)
func HasUnmergedPullRequestsByHeadInfo(ctx context.Context, repoID int64, branch string) (bool, error) {
	return db.GetEngine(ctx).
		Where("head_repo_id = ? AND head_branch = ? AND has_merged = ? AND issue.is_closed = ? AND flow = ?",
			repoID, branch, false, false, PullRequestFlowGithub).
		Join("INNER", "issue", "issue.id = pull_request.issue_id").
		Exist(&PullRequest{})
}

// GetUnmergedPullRequestsByBaseInfo returns all pull requests that are open and have not been merged
// for the given base information (repo and branch).
func GetUnmergedPullRequestsByBaseInfo(ctx context.Context, repoID int64, branch string) ([]*PullRequest, error) {
	prs := make([]*PullRequest, 0, 2)
	return prs, db.GetEngine(ctx).
		Where("base_repo_id=? AND base_branch=? AND has_merged=? AND issue.is_closed=?",
			repoID, branch, false, false).
		OrderBy("issue.updated_unix DESC").
		Join("INNER", "issue", "issue.id=pull_request.issue_id").
		Find(&prs)
}

// GetPullRequestIDsByCheckStatus returns the IDs of all pull requests with the given check status.
func GetPullRequestIDsByCheckStatus(ctx context.Context, status PullRequestStatus) ([]int64, error) {
	prs := make([]int64, 0, 10)
	return prs, db.GetEngine(ctx).Table("pull_request").
		Where("status=?", status).
		Cols("pull_request.id").
		Find(&prs)
}

// PullRequests returns all pull requests for a base Repo by the given conditions
func PullRequests(ctx context.Context, baseRepoID int64, opts *PullRequestsOptions) (PullRequestList, int64, error) {
	if opts.Page <= 0 {
		opts.Page = 1
	}

	countSession := listPullRequestStatement(ctx, baseRepoID, opts)
	maxResults, err := countSession.Count(new(PullRequest))
	if err != nil {
		log.Error("Count PRs: %v", err)
		return nil, maxResults, err
	}

	findSession := listPullRequestStatement(ctx, baseRepoID, opts)
	applySorts(findSession, opts.SortType, 0)
	findSession = db.SetSessionPagination(findSession, opts)
	prs := make([]*PullRequest, 0, opts.PageSize)
	return prs, maxResults, findSession.Find(&prs)
}
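
`PullRequests` first counts all matching rows and then fetches a single page, so callers get both the page and the total needed to render pagination. The sketch below shows the same count-then-page shape over an in-memory slice. `paginate` is a hypothetical helper, and its handling of a non-positive page size is an assumption of this sketch rather than a description of `db.SetSessionPagination`.

```go
package main

import "fmt"

// paginate returns the requested 1-based page of items plus the total count.
// Pages below 1 are treated as page 1, mirroring the guard in PullRequests.
func paginate(items []int, page, pageSize int) ([]int, int) {
	total := len(items)
	if page <= 0 {
		page = 1
	}
	// Assumption of this sketch: a non-positive page size yields no rows.
	if pageSize <= 0 {
		return nil, total
	}
	start := (page - 1) * pageSize
	if start >= total {
		return nil, total
	}
	end := start + pageSize
	if end > total {
		end = total
	}
	return items[start:end], total
}

func main() {
	page, total := paginate([]int{10, 20, 30, 40, 50}, 0, 2)
	fmt.Println(page, total)
}
```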

// PullRequestList defines a list of pull requests
type PullRequestList []*PullRequest

func (prs PullRequestList) getRepositoryIDs() []int64 {
	repoIDs := make(container.Set[int64])
	for _, pr := range prs {
		if pr.BaseRepo == nil && pr.BaseRepoID > 0 {
			repoIDs.Add(pr.BaseRepoID)
		}
		if pr.HeadRepo == nil && pr.HeadRepoID > 0 {
			repoIDs.Add(pr.HeadRepoID)
		}
	}
	return repoIDs.Values()
}

// LoadRepositories loads the base and head repositories that are not loaded yet
func (prs PullRequestList) LoadRepositories(ctx context.Context) error {
	repoIDs := prs.getRepositoryIDs()
	reposMap := make(map[int64]*repo_model.Repository, len(repoIDs))
	if err := db.GetEngine(ctx).
		In("id", repoIDs).
		Find(&reposMap); err != nil {
		return fmt.Errorf("find repos: %w", err)
	}
	for _, pr := range prs {
		if pr.BaseRepo == nil {
			pr.BaseRepo = reposMap[pr.BaseRepoID]
		}
		if pr.HeadRepo == nil {
			pr.HeadRepo = reposMap[pr.HeadRepoID]
			pr.isHeadRepoLoaded = true
		}
	}
	return nil
}

// LoadAttributes loads the issues of the pull requests in the list
func (prs PullRequestList) LoadAttributes(ctx context.Context) error {
	if _, err := prs.LoadIssues(ctx); err != nil {
		return err
	}
	return nil
}

// LoadIssues loads the issues that are not loaded yet and returns the issues of all pull requests in the list
func (prs PullRequestList) LoadIssues(ctx context.Context) (IssueList, error) {
	if len(prs) == 0 {
		return nil, nil
	}

	// Load issues which are not loaded
	issueIDs := container.FilterSlice(prs, func(pr *PullRequest) (int64, bool) {
		return pr.IssueID, pr.Issue == nil && pr.IssueID > 0
	})
	issues := make(map[int64]*Issue, len(issueIDs))
	if err := db.GetEngine(ctx).
		In("id", issueIDs).
		Find(&issues); err != nil {
		return nil, fmt.Errorf("find issues: %w", err)
	}

	issueList := make(IssueList, 0, len(prs))
	for _, pr := range prs {
		if pr.Issue == nil {
			pr.Issue = issues[pr.IssueID]
			/*
				Old code:
				pr.Issue.PullRequest = pr // panic here means issueIDs and prs are not in sync

				A panic was acceptable because this is almost impossible under normal use.
				But in integration testing, an asynchronous task could read a database that has been reset.
				So returning an error makes more sense and lets the caller choose to ignore it.
			*/
			if pr.Issue == nil {
				return nil, fmt.Errorf("issues and prs may be not in sync: cannot find issue %v for pr %v: %w", pr.IssueID, pr.ID, util.ErrNotExist)
			}
		}
		pr.Issue.PullRequest = pr
		if pr.Issue.Repo == nil {
			pr.Issue.Repo = pr.BaseRepo
		}
		issueList = append(issueList, pr.Issue)
	}
	return issueList, nil
}
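
`LoadIssues` follows a batch-loading pattern: collect the IDs that are still missing, fetch them in one query, attach the results, and return an error instead of panicking when a row cannot be found. The sketch below illustrates that pattern with an in-memory store in place of the database. The `issue` and `pullRequest` types, `errNotExist`, `fetchFrom`, and `attachIssues` are hypothetical names, and the back-link from issue to pull request is omitted for brevity.

```go
package main

import (
	"errors"
	"fmt"
)

// errNotExist is a stand-in for a "not found" sentinel error.
var errNotExist = errors.New("resource does not exist")

type issue struct{ ID int64 }

type pullRequest struct {
	ID, IssueID int64
	Issue       *issue
}

// fetchFrom returns a batch loader backed by an in-memory store,
// standing in for a single "WHERE id IN (...)" query.
func fetchFrom(store map[int64]*issue) func(ids []int64) map[int64]*issue {
	return func(ids []int64) map[int64]*issue {
		out := make(map[int64]*issue, len(ids))
		for _, id := range ids {
			if is, ok := store[id]; ok {
				out[id] = is
			}
		}
		return out
	}
}

// attachIssues loads every issue that is not attached yet with one fetch call
// and reports an error when a pull request points at an issue that is missing.
func attachIssues(prs []*pullRequest, fetch func(ids []int64) map[int64]*issue) error {
	var missing []int64
	for _, pr := range prs {
		if pr.Issue == nil && pr.IssueID > 0 {
			missing = append(missing, pr.IssueID)
		}
	}
	found := fetch(missing)
	for _, pr := range prs {
		if pr.Issue == nil {
			pr.Issue = found[pr.IssueID]
		}
		if pr.Issue == nil {
			return fmt.Errorf("cannot find issue %d for pr %d: %w", pr.IssueID, pr.ID, errNotExist)
		}
	}
	return nil
}

func main() {
	store := map[int64]*issue{1: {ID: 1}}
	prs := []*pullRequest{{ID: 100, IssueID: 1}, {ID: 101, IssueID: 2}}
	fmt.Println(attachIssues(prs, fetchFrom(store)))
}
```

Wrapping the sentinel with `%w` lets a caller test for the "not found" case with `errors.Is` and decide whether to ignore it.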

// GetIssueIDs returns all issue ids
func (prs PullRequestList) GetIssueIDs() []int64 {
	return container.FilterSlice(prs, func(pr *PullRequest) (int64, bool) {
		return pr.IssueID, pr.IssueID > 0
	})
}

// LoadReviewCommentsCounts returns the number of comments of type CommentTypeReview per issue ID
func (prs PullRequestList) LoadReviewCommentsCounts(ctx context.Context) (map[int64]int, error) {
	issueIDs := prs.GetIssueIDs()
	countsMap := make(map[int64]int, len(issueIDs))
	counts := make([]struct {
		IssueID int64
		Count   int
	}, 0, len(issueIDs))
	if err := db.GetEngine(ctx).Select("issue_id, count(*) as count").
		Table("comment").In("issue_id", issueIDs).And("type = ?", CommentTypeReview).
		GroupBy("issue_id").Find(&counts); err != nil {
		return nil, err
	}
	for _, c := range counts {
		countsMap[c.IssueID] = c.Count
	}
	return countsMap, nil
}

// LoadReviews returns the latest review of each reviewer, followed by the latest
// review of each reviewer team, for the pull requests in the list
func (prs PullRequestList) LoadReviews(ctx context.Context) (ReviewList, error) {
	issueIDs := prs.GetIssueIDs()
	reviews := make([]*Review, 0, len(issueIDs))

	subQuery := builder.Select("max(id) as id").
		From("review").
		Where(builder.In("issue_id", issueIDs)).
		And(builder.In("`type`", ReviewTypeApprove, ReviewTypeReject, ReviewTypeRequest)).
		And(builder.Eq{
			"dismissed":          false,
			"original_author_id": 0,
			"reviewer_team_id":   0,
		}).
		GroupBy("issue_id, reviewer_id")
	// Get latest review of each reviewer, sorted in order they were made
	if err := db.GetEngine(ctx).In("id", subQuery).OrderBy("review.updated_unix ASC").Find(&reviews); err != nil {
		return nil, err
	}

	teamReviewRequests := make([]*Review, 0, 5)
	subQueryTeam := builder.Select("max(id) as id").
		From("review").
		Where(builder.In("issue_id", issueIDs)).
		And(builder.Eq{
			"original_author_id": 0,
		}).
		And(builder.Neq{
			"reviewer_team_id": 0,
		}).
		GroupBy("issue_id, reviewer_team_id")
	if err := db.GetEngine(ctx).In("id", subQueryTeam).OrderBy("review.updated_unix ASC").Find(&teamReviewRequests); err != nil {
		return nil, err
	}

	if len(teamReviewRequests) > 0 {
		reviews = append(reviews, teamReviewRequests...)
	}

	return reviews, nil
}
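
Both subqueries above use the same "latest row per group" technique: select `max(id)` for each group, then load exactly those rows ordered by update time. An in-memory sketch of that technique follows. The `review` struct and `latestPerReviewer` are hypothetical, and the tie-break on ID is an assumption added here only to make the output deterministic.

```go
package main

import (
	"fmt"
	"sort"
)

// review is a hypothetical, trimmed-down stand-in for a review row.
type review struct {
	ID, IssueID, ReviewerID, UpdatedUnix int64
}

// latestPerReviewer keeps, for every (issue, reviewer) pair, only the review
// with the highest ID, then orders the survivors by update time, oldest first.
func latestPerReviewer(all []review) []review {
	type key struct{ issueID, reviewerID int64 }
	latest := make(map[key]review)
	for _, r := range all {
		k := key{r.IssueID, r.ReviewerID}
		if cur, ok := latest[k]; !ok || r.ID > cur.ID {
			latest[k] = r
		}
	}
	out := make([]review, 0, len(latest))
	for _, r := range latest {
		out = append(out, r)
	}
	sort.Slice(out, func(i, j int) bool {
		if out[i].UpdatedUnix != out[j].UpdatedUnix {
			return out[i].UpdatedUnix < out[j].UpdatedUnix
		}
		return out[i].ID < out[j].ID // tie-break for deterministic output
	})
	return out
}

func main() {
	for _, r := range latestPerReviewer([]review{
		{ID: 1, IssueID: 10, ReviewerID: 100, UpdatedUnix: 5},
		{ID: 2, IssueID: 10, ReviewerID: 100, UpdatedUnix: 9},
		{ID: 3, IssueID: 10, ReviewerID: 200, UpdatedUnix: 7},
	}) {
		fmt.Println(r.ID, r.ReviewerID)
	}
}
```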

// HasMergedPullRequestInRepo returns whether the user (poster) has a merged pull request in the repo
func HasMergedPullRequestInRepo(ctx context.Context, repoID, posterID int64) (bool, error) {
	return db.GetEngine(ctx).
		Join("INNER", "pull_request", "pull_request.issue_id = issue.id").
		Where("repo_id=?", repoID).
		And("poster_id=?", posterID).
		And("is_pull=?", true).
		And("pull_request.has_merged=?", true).
		Select("issue.id").
		Limit(1).
		Get(new(Issue))
}

// GetPullRequestByIssueIDs returns all pull requests by issue ids
func GetPullRequestByIssueIDs(ctx context.Context, issueIDs []int64) (PullRequestList, error) {
	prs := make([]*PullRequest, 0, len(issueIDs))
	return prs, db.GetEngine(ctx).
		Where("issue_id > 0").
		In("issue_id", issueIDs).
		Find(&prs)
}